2014-07-01 »

The Curse of Smart People

A bit over 3 years ago, after working for many years at a series of startups (most of which I co-founded), I disappeared through a Certain Vortex to start working at a Certain Large Company which I won't mention here. But it's not really a secret; you can probably figure it out if you bing around a bit for my name.

Anyway, this big company that now employs me is rumoured to hire the smartest people in the world.

Question number one: how true is that?

Answer: I think it's really true. A surprisingly large fraction of the smartest programmers in the world do work here. In very large quantities. In fact, quantities so large that I wouldn't have thought that so many really smart people existed or could be centralized in one place, but trust me, they do and they can. That's pretty amazing.

Question number two: but I'm sure they hired some non-smart people too, right?

Answer: surprisingly infrequently. When I went for my job interview there, they set me up for a full day of interviewers (5 sessions plus lunch). I decided that I would ask a few questions of my own in these interviews, and try to guess how good the company is based on how many of the interviewers seemed clueless. My hypothesis was that there are always some bad apples in any medium to large company, so if the success rate was, say, 3 or 4 out of 5 interviewers being non-clueless, that's pretty good.

Well, they surprised me. I had 5 out of 5 non-clueless interviewers, and in fact, all of them were even better than non-clueless: they impressed me. If this was the average smartness of people around here, maybe the rumours were really true, and they really had something special going on.

(I later learned that my evil plan and/or information about my personality may have been leaked to the recruiters who may have intentionally set me up with especially clueful interviewers to avoid the problem, but this can neither be confirmed nor denied.)

Anyway, I continue to be amazed at the overall smartness of people at this place. Overall, very nearly everybody, across the board, surprises or impresses me with how smart they are.

Pretty great, right?

Yes.

But it's not perfect. Smart people have a problem, especially (although not only) when you put them in large groups. That problem is an ability to convincingly rationalize nearly anything.

Everybody rationalizes. We all want the world to be a particular way, and we all make mistakes, and we all want to be successful, and we all want to feel good about ourselves.

We all make decisions for emotional or intuitive reasons instead of rational ones. Some of us admit that. Some of us think using our emotions is better than being rational all the time. Some of us don't.

Smart people, computer types anyway, tend to come down on the side of people who don't like emotions. Programmers, who do logic for a living.

Here's the problem. Logic is a pretty powerful tool, but it only works if you give it good input. As the famous computer science maxim says, "garbage in, garbage out." If you know all the constraints and weights - with perfect precision - then you can use logic to find the perfect answer. But when you don't, which is always, there's a pretty good chance your logic will lead you very, very far astray.

Most people find this out pretty early on in life, because their logic is imperfect and fails them often. But really, really smart computer geek types may not ever find it out. They start off living in a bubble, they isolate themselves because socializing is unpleasant, and, if they get a good job straight out of school, they may never need to leave that bubble. To such people, it may appear that logic actually works, and that they are themselves logical creatures.

I guess I was lucky. I accidentally co-founded what turned into a pretty successful startup while still in school. Since I was co-running a company, I found out pretty fast that I was wrong about things and that the world didn't work as expected most of the time. This was a pretty unpleasant discovery, but I'm very glad I found it out earlier in life instead of later, because I might have wasted even more time otherwise.

Working at a large, successful company lets you keep your isolation. If you choose, you can just ignore all the inconvenient facts about the world. You can make decisions based on whatever input you choose. The success or failure of your project in the market is not really that important; what's important is whether it gets canceled or not, a decision which is at the whim of your boss's boss's boss's boss, who, as your only link to the unpleasantly unpredictable outside world, seems to choose projects quasi-randomly, and certainly without regard to the quality of your contribution.

It's a setup that makes it very easy to describe all your successes (project not canceled) in terms of your team's greatness, and all your failures (project canceled) in terms of other people's capriciousness. End users and profitability, for example, rarely enter into it. This project isn't supposed to be profitable; we benefit whenever people spend more time online. This project doesn't need to be profitable; we can use it to get more user data. Users are unhappy, but that's just because they're change averse. And so on.

What I have learned, working here, is that smart, successful people are cursed. The curse is confidence. It's confidence that comes from a lifetime of success after real success, an objectively great job, working at an objectively great company, making a measurably great salary, building products that get millions of users. You must be smart. In fact, you are smart. You can prove it.

Ironically, one of the biggest social problems currently reported at work is lack of confidence, also known as Impostor Syndrome. People with confidence try to help people fix their Impostor Syndrome, under the theory that they are in fact as smart as people say they are, and they just need to accept it.

But I think Impostor Syndrome is valuable. The people with Impostor Syndrome are the people who aren't sure that a logical proof of their smartness is sufficient. They're looking around them and finding something wrong, an intuitive sense that around here, logic does not always agree with reality, and the obviously right solution does not lead to obviously happy customers, and it's unsettling because maybe smartness isn't enough, and maybe if we don't feel like we know what we're doing, it's because we don't.

Impostor Syndrome is that voice inside you saying that not everything is as it seems, and it could all be lost in a moment. The people with the problem are the people who can't hear that voice.

2014-07-03 »

"The best grass in my yard is the grass @googlefiber planted after digging to install fiber. Truly dominating all aspects of my life." – twitter

#throughgrass

2014-07-06 »

Well that escalated quickly.

http://www.cnet.com/news/googles-brin-we-want-to-end-individual-car-ownership/

The good news is that I must have improved as a writer, or at least gotten very lucky, because so far my little article doesn't seem to have been quoted or misquoted in such a way as to make me or anyone else look like an idiot.

2014-07-07 »

In other news, if you don't post flattering pictures of yourself to the Internet, they will find non-flattering pictures.

2014-07-08 »

"Google company culture delusional says employee"

Well okay, that's not what I said... but.

2014-07-09 »

The Curse of Vicarious Popularity

I had already intended for this next post to be a discussion of why people seem to suddenly disappear after they go to work for certain large companies. But then, last week, I went and made an example of myself.

Everything started out normally (the usual bit of attention on news.yc). It then progressed to a mention on Daring Fireball, which was nice, but okay, that's happened before. A few days later, though, things started going a little overboard, as my little article about human nature got a company name attached to it and ended up quoted on Business Insider and CNet.

Now don't get me wrong, I like fame and fortune as much as the next person, but those last articles crossed an awkward threshold for me. I wasn't quoted because I said something smart; I was quoted because what I said wasn't totally boring, and an interesting company name got attached. Suddenly it was news, where before it was not.

Not long after joining a big company, I asked my new manager - wow, I had a manager for once! - what would happen if I simply went and did something risky without getting a million signoffs from people first. He said something like this, which you should not quote because he was not speaking for his employer and neither am I: "Well, if it goes really bad, you'll probably get fired. If it's successful, you'll probably get a spot bonus."

Maybe that was true, and maybe he was just telling me what I, as a person arriving from the startup world, wanted to hear. I think it was the former. So far I have received some spot bonuses and no firings, but the thing about continuing to take risks is my luck could change at any time.

In today's case, the risk in question is... saying things on the Internet.

What I have observed is that the relationship between big companies and the press is rather adversarial. I used to really enjoy reading fake anecdotes about it at Fake Steve Jobs, so that's my reference point, but I'm pretty sure all those anecdotes had some basis in reality. After all, Fake Steve Jobs was a real journalist pretending to be a real tech CEO, so it was his job to know both sides.

There are endless tricks being played on everyone! PR people want a particular story to come out so they spin their press releases a particular way; reporters want more conflict so they seek it out or create it or misquote on purpose; PR people learn that this happens so they become even more absolutely iron-fisted about what they say to the press. There are classes that business people at big companies can take to learn to talk more like politicians. Ironically, if each side would relax a bit and stop trying so hard to manipulate the other, we could have much better and more interesting and less tabloid-like tech news, but that's just not how game theory works. The first person to break ranks would get too much of an unfair advantage. And that's why we can't have nice things.

Working at a startup, all publicity is good publicity, and you're the underdog anyway, and you're not publicly traded, so you can be pretty relaxed about talking to the press. Working at a big company, you are automatically the bad guy in every David and Goliath story, unless you are very lucky and there's an even bigger Goliath. There is no maliciousness in that; it's just how the story is supposed to be told, and the writers give readers what they want.

Which brings me back to me, and people like me, who just write for fun. Since I work at a big company, there are a bunch of things I simply should not say, not because they're secret or there's some rule against saying them - there isn't, as far as I know - but because no matter what I say, my words are likely to be twisted and used against me, and against others. If I can write an article about Impostor Syndrome and have it quoted by big news organizations (to their credit, the people quoting it so far have done a good job), imagine the damage I might do if I told you something mean about a competitor, or a bug, or a missing feature, or an executive. Even if, or especially if, it were just my own opinion.

In the face of that risk - the risk of unintentionally doing a lot of damage to your friends and co-workers - most people just give up and stop writing externally. You may have noticed that I've greatly cut back myself. But I have a few things piling up that I've been planning to say, particularly about wifi. Hopefully it will be so technically complicated that I will scare away all those press people.

And if we're lucky, I'll get the spot bonus and not that other thing.

2014-07-11 »

The ex-entrepreneur decision making process: an anecdote

- If you are so smart why are twiddling around bits [...] for breadcrumbs when you could have started your own company or gotten into academia?

-- Someone on the Internet, in response to my post about smart people

That's an excellent question, actually. Okay, it could maybe have been phrased more politely, and I won't even begin to answer the question about academia, and in fact the breadcrumbs are prepared by professional chefs and are quite delicious. (I'm not even kidding.) But that question about starting my own company, that's a good question, because I've done that a few times before and this time I didn't.

The answer is that I had a conversation like this:

Me: I want to make wifi routers. I think they're all terrible, and they're all commoditized, and all competing on price. Someone needs to do to wifi routers what the iPhone did to phones.

Friend: That sounds fun. You should start a startup.

Me: Right, I was going to do that.

Friend: And then recruit me to it.

Me: Er, yeah, I will definitely get back to --

Friend: Or we could start it together! You should come to New York and convince me.

Me: Um. Well, you see, the first rule of starting a startup is you don't waste money on unnecessary --

Friend: I have an idea!

Me: Oh good.

Friend: I work at a big company. You should come interview here.

Me: I don't see how that will help at all.

Friend: It's simple. They pay for the flight, and you come for an interview, then stay for a few days and you convince me to work with you.

Me: That sounds a bit unethical.

Friend: Nonsense! It's totally fine as long as you promise to hear them out. That's all any job interview is, for either side. No commitments. You just need to come with an open mind.

Me: Hmm.

Friend: Plus if you get hired, I could get a bonus just for referring you!

Me: Oh, okay then.

So off I went to New York. tl;dr he got a referral bonus and I learned to never, ever go into any situation with an open mind.

Just kidding.

Okay, partly kidding.

But the real reason I decided not to start this startup was as follows: I couldn't figure out a business model for selling the world's most ultimate consumer wifi router. I knew how to build one, but not how to sell one. The problem was the ecosystem wasn't really there. A now-small subset of customers go to the store and buy a router based on brand name and price; they don't recognize most brand names and don't know what price is best, so they pick one randomly. Generally they will start by buying a terrible router for $30, and find out that it's terrible, and if they can afford it, they'll switch to a possibly-less-terrible one for $100+. But sucks to be them, because if they buy a really expensive one for, say, $200+, it's often even worse because it's bleeding edge experimental.

And anyway, that whole segment is not the one you want to be in, because nowadays ISPs all provide a wifi router along with every account, and most people take what they're given and don't bother to ever replace it. So if you want to change the world of wifi routers, you have to have a partnership with an ISP. But ISPs don't want to buy the best wifi router; they want to buy the cheapest wifi router that meets specifications. Sure, they'd probably pay a bit extra for a router with fewer bugs that causes fewer tech support calls... that's just good economics. But how will your tiny startup prove that your router causes fewer tech support calls? There's no chance.

And so the cycle continues: ISPs buy cheap-o routers and give them to you, and they crash and you fix them by turning them off and on again, and there's bufferbloat everywhere, and the Internet is a flakey place, and so on, forever.

I couldn't figure out how to fix that so I took a job doing something else.

2014-07-13 »

A Canadian newspaper's take on overconfidence and impostor syndrome. As a bonus, they mention EWB's "Failure Report."

2014-07-14 »

Wifi and the square of the radius

I have many things to tell you about wifi, but before I can tell you most of them, I have to tell you some basic things.

First of all, there's the question of transmit power, which is generally expressed in watts. You may recall that a watt is a joule per second. A milliwatt is 1/1000 of a watt. A transmitter generally can be considered to radiate its signal outward from a center point. A receiver "catches" part of the signal with its antenna.

The received signal power declines with the square of the radius from the transmit point. That is, if you're twice as far away as distance r, the received signal power at distance 2r is 1/4 as much as it was at r. Why is that?

Imagine a soap bubble. It starts off at the center point and there's a fixed amount of soap. As it inflates, the same amount of soap is stretched out over a larger and larger area - the surface area. The surface area of a sphere is 4 π r².

Well, a joule of energy works like a millilitre of soap. It starts off at the transmitter and gets stretched outward in the shape of a sphere. The amount of soap (or energy) at one point on the sphere is proportional to 1 / (4 π r²).

Okay? So it goes down with the square of the radius.

A transmitter transmitting constantly will send out a total of one joule per second, or a watt. You can think of it as a series of ever-expanding soap bubbles, each expanding at the speed of light. At any given distance from the transmitter, the soap bubble currently at that distance will have a surface area of 4 π r², and so the power will be proportional to 1 / that.

(I say "proportional to" because the actual formula is a bit messy and depends on your antenna and whatnot. The actual power at any point is of course zero, because the point is infinitely small, so you can only measure the power over a certain area, and that area is hard to calculate except that your antenna picks up about the same area regardless of where it is located. So although it's hard to calculate the power at any given point, it's pretty easy to calculate that a point twice as far away will have 1/4 the power, and so on. That turns out to be good enough.)

If you've ever done much programming, someone has probably told you that O(n^2) algorithms are bad. Well, this is an O(n^2) algorithm where n is the distance. What does that mean?

- 1cm -> 1 x

- 2cm -> 1/4 x

- 3cm -> 1/9 x

- 10cm -> 1/100 x

- 20cm -> 1/400 x

- 100cm (1m) -> 1/10,000 x

- 10,000cm (100m) -> 1/100,000,000 x
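The numbers above can be reproduced with a few lines of Python. This is only a sketch of the "proportional to" relationship: the function name `relative_power` and the 1cm reference distance are illustrative assumptions, and antenna details are ignored entirely.

```python
def relative_power(distance, reference_distance=1.0):
    """Received power at `distance`, relative to the power at `reference_distance`.

    Pure inverse-square law: doubling the distance quarters the power.
    Both distances must be in the same unit (cm here).
    """
    return (reference_distance / distance) ** 2


# Print each distance with its power as a fraction of the 1cm power.
for d_cm in [1, 2, 3, 10, 20, 100, 10_000]:
    fraction = round(1 / relative_power(d_cm))
    print(f"{d_cm:>6} cm -> 1/{fraction:,} x")
```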

As you get farther away from the transmitter, that signal strength drops fast. So fast, in fact, that people gave up counting the mW of output and came up with a new unit, called dBm (decibels relative to one milliwatt), that expresses the signal power logarithmically:

- n dBm = 10^(n/10) mW

So 0 dBm is 1 mW, and 30 dBm is 1W (the maximum legal transmit power for most wifi channels). And wifi devices have a "receiver sensitivity" that goes down to about -90 dBm. That's nine orders of magnitude below 0; a billionth of a milliwatt, ie. a trillionth of a watt. I don't even know the word for that. A trilliwatt? (Okay, I looked it up, it's a picowatt.)
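For anyone who wants to check those conversions, here is a minimal Python sketch of the dBm formula and its inverse. The function names are made up for illustration.

```python
import math


def dbm_to_mw(dbm):
    """Convert a power level in dBm to milliwatts: mW = 10^(dBm/10)."""
    return 10 ** (dbm / 10)


def mw_to_dbm(mw):
    """Convert milliwatts to dBm: dBm = 10 * log10(mW). Requires mw > 0."""
    return 10 * math.log10(mw)


for level in [30, 0, -90]:
    mw = dbm_to_mw(level)
    print(f"{level:>4} dBm = {mw:g} mW = {mw / 1000:g} W")
```

Every 10 dB is one order of magnitude, so going from 30 dBm down to -90 dBm is 120 dB, or twelve orders of magnitude.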

Way back in university, I tried to build a receiver for wired modulated signals. I had no idea what I was doing, but I did manage to munge it, more or less, into working. The problem was, every time I plugged a new node into my little wired network, the signal strength would be cut down by 1/n. This seemed unreasonable to me, so I asked around: what am I doing wrong? What is the magical circuit that will let me split my signal down two paths without reducing the signal power? Nobody knew the answer. (Obviously I didn't ask the right people :))

The answer is, it turns out, that there is no such magical circuit. The answer is that 1/n is such a trivial signal strength reduction that essentially, on a wired network, nobody cares. We have RF engineers building systems that can handle literally a 1/1000000000000 (from 30 dBm to -90 dBm) drop in signal. Unless your wired network has a lot of nodes or you are operating it way beyond distance specifications, your silly splitter just does not affect things very much.

In programming terms, your runtime is O(n) + O(n^2) = O(n + n^2) = O(n^2). You don't bother optimizing the O(n) part, because it just doesn't matter.

(Update 2014/07/14: The above comment caused a bit of confusion because it talks about wired networks while the rest of the article is about wireless networks. In a wireless network, people are usually trying to extract every last meter of range, and a splitter is a silly idea anyway, so wasting -3 dB is a big deal and nobody does that. Wired networks like I was building at the time tend to have much less, and linear instead of quadratic, path loss and so they can tolerate a bunch of splitters. For example, good old passive arcnet star topology, or ethernet-over-coax, or MoCA, or cable TV.)

There is a lot more to say about signals, but for now I will leave you with this: there are people out there, the analog RF circuit design gods and goddesses, who can extract useful information out of a trillionth of a watt. Those people are doing pretty amazing work. They are not the cause of your wifi problems.

2014-07-17 »

I've been trying out Apple Maps as I walk around Oslo and Dublin. It's actually not bad.

It makes severe mistakes with things like the actual outline of parks (most of them are only coloured green for half the actual park space) but is otherwise doing okay. If I search for museums, I get museums in Oslo. The "instant search" results match up exactly with the non-instant search results. The addresses I've searched for seem to have been accurate.

It also has cool features like not being slow, not forgetting my location and search terms if I exit and reload the app, loading tiles faster on slow networks (I'm on T-Mobile's free+slow international roaming thingy), caching tiles for longer, prefetching tiles that are outside my viewport, displaying the names of important landmarks (useful) instead of landmarks I've visited previously (annoying), remembering my recent search terms, and telling me which way I'm walking (twirly compass view). The UI has all options readily visible rather than triggered by undiscoverable swipes, drags, and slides. It uses Yelp for things like restaurant search, which is convenient because that's what I use for restaurant search.

I had imagined they would probably especially suck outside the U.S. (since the map accuracy is bad enough inside the U.S.) but so far, not so much.

In fairness, they have no transit, biking, or walking directions, all of which I've needed while on this trip (and I switched to Google Maps to obtain). And I have so little trust for their data accuracy that I double check everything with Google Maps just to make sure. So they certainly don't have a home run yet. But they're definitely not just fooling around.

I love competition.

2014-07-18 »

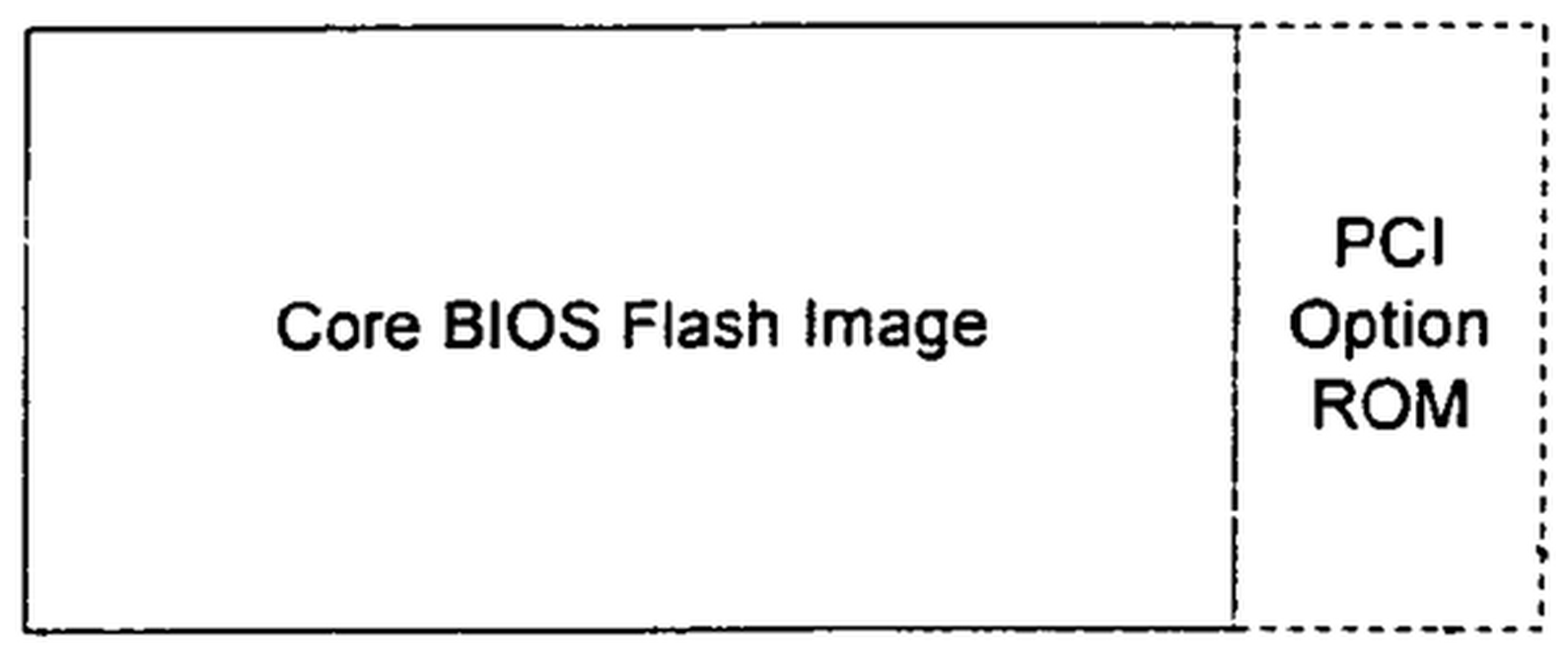

You've got to be kidding me. Supposedly legit BIOS extension, included by the manufacturer in many laptops, hijacks Windows boot process then updates itself using non-encrypted HTTP.

http://securelist.com/analysis/publications/58278/absolute-computrace-revisited/

I didn't like non-open-source firmware before, but... honestly.

2014-07-19 »

Wifi maximum range, lies, and the signal to noise ratio

Last time we talked about how wifi signals cross about 12 orders of magnitude in terms of signal power, from +30dBm (1 watt) to -90dBm (1 picowatt). I mentioned my old concern back in school about splitters causing a drop to 1/n of the signal on a wired network, where n is the number of nodes, and said that doesn't matter much after all.

Why doesn't it matter? If you do digital circuits for a living, you are familiar with the way digital logic works: if the voltage is over a threshold, say, 1.5V, then you read a 1. If it's under the threshold, then you read a 0. So if you cut all the voltages in half, that's going to be a mess because the threshold needs to get cut in half too. And if you have an unknown number of nodes on your network, then you don't know where the threshold is at all, which is a problem. Right?

Not necessarily. It turns out analog signal processing is - surprise! - not like digital signal processing.

ASK, FSK, PSK, QAM

Essentially, in receiving an analog signal and converting it back to digital, you want to do one of three things:

- see if the signal power is over/under a threshold ("amplitude shift keying" or ASK)

- or: see if the signal is frequency #0 or frequency #1 ("frequency shift keying" or FSK)

- or: fancier FSK-like schemes such as PSK or QAM (look it up yourself :)).

Realistically nowadays everyone does QAM, but the physics are pretty much the same for FSK and it's easier to explain, so let's stick with that.

But first, what's wrong with ASK? Why toggle between two frequencies (FSK) when you can just toggle one frequency on and off (ASK)? The answer comes down mainly to circuit design. To design an ASK receiver, you have to define a threshold, and when the amplitude is higher than the threshold, call it a 1, otherwise call it a 0. But what is the threshold? It depends on the signal strength. What is the signal strength? The height of a "1" signal. How do we know whether we're looking at a "1" signal? It's above the threshold ... It ends up getting tautological.

The way you implement it is to design an "automatic gain control" (AGC) circuit that amplifies more when too few things are over the threshold, and less when too many things are over the threshold. As long as you have about the same number of 1's and 0's, you can tune your AGC to do the right thing by averaging the received signal power over some amount of time.

In case you don't have an equal number of 1's and 0's, you can fake it with various kinds of digital encodings. (One easy encoding is to split each bit into two halves and always flip the signal upside down for the second half, producing a "balanced" signal.)
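The "split each bit in half and flip the second half" trick resembles what is usually called Manchester encoding. A rough Python sketch follows, assuming the signal is represented as a list of on/off levels (1 and 0); the function names are hypothetical.

```python
def encode_balanced(bits):
    """Turn each bit into two half-bit symbols: the bit, then its inverse.

    1 -> [1, 0] and 0 -> [0, 1], so every bit contributes exactly one
    "on" half and one "off" half, whatever the data looks like.
    """
    symbols = []
    for bit in bits:
        symbols.extend([bit, 1 - bit])
    return symbols


def decode_balanced(symbols):
    """Recover the original bits by keeping the first half of each pair."""
    return symbols[0::2]


data = [1, 1, 1, 1, 1, 1, 1, 0]          # very lopsided input
encoded = encode_balanced(data)
print(encoded)
print(sum(encoded), "ones out of", len(encoded), "symbols")
```

The cost is that you send twice as many symbols for the same data, which is one reason this ends up feeling fiddly.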

So, you can do this of course, and people have done it. But it just ends up being complicated and fiddly. FSK turns out to be much easier. With FSK, you just build two circuits: one for detecting the amplitude of the signal at frequency f1, and one for detecting the amplitude of the signal at frequency f2. It turns out to be easy to design analog circuits that do this. Then you design a "comparator" circuit that will tell you which of two values is greater; it turns out to be easy to design that too. And you're done! No trying to define a "threshold" value, no fine-tuned AGC circuit, no circular reasoning. So FSK and FSK-like schemes caught on.
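Here is a toy software version of that idea in Python: two amplitude detectors and a comparator. It is a sketch, not how a real radio does it. The sample rate, tone frequencies, and bit length are all assumed values chosen so that each bit holds a whole number of cycles of both tones.

```python
import math

SAMPLE_RATE = 8000      # samples per second (assumed)
F0, F1 = 1000, 2000     # tone for a 0 bit and a 1 bit, in Hz (assumed)
SAMPLES_PER_BIT = 80    # 10 ms per bit (assumed)


def tone(freq, amplitude=1.0):
    """Generate one bit's worth of a sine wave at `freq`."""
    return [amplitude * math.sin(2 * math.pi * freq * n / SAMPLE_RATE)
            for n in range(SAMPLES_PER_BIT)]


def amplitude_at(samples, freq):
    """Estimate how much of `freq` is present by correlating the samples
    with a cosine and a sine at that frequency."""
    i = sum(s * math.cos(2 * math.pi * freq * n / SAMPLE_RATE)
            for n, s in enumerate(samples))
    q = sum(s * math.sin(2 * math.pi * freq * n / SAMPLE_RATE)
            for n, s in enumerate(samples))
    return 2 * math.hypot(i, q) / len(samples)


def detect_bit(samples):
    """The comparator: whichever tone is stronger wins. No threshold."""
    return 1 if amplitude_at(samples, F1) > amplitude_at(samples, F0) else 0


# The same detector works at full strength and at a millionth of it.
for amp in [1.0, 1e-6]:
    print(amp, detect_bit(tone(F0, amp)), detect_bit(tone(F1, amp)))
```

Note that `detect_bit` never looks at an absolute level, which is the whole point: scaling the input up or down scales both detector outputs equally and the comparison comes out the same.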

SNR

With that, you can see why my original worry about a 1/n signal reduction from cable splitters didn't matter. As long as you're using FSK, the 1/n reduction doesn't mean anything; your amplitude detector and comparator circuits just don't care about the exact level, essentially. With wifi, we take that to the extreme with tiny little FSK-like signals down to a picowatt or so.

But where do we stop? Why only a picowatt? Why not even smaller?

The answer is, of course, background noise. No signal exists in perfect isolation, except in a simulation (and even in a simulation, the limitations of floating point accuracy might cause problems). There might be leftover bits of other people's signals transmitted from far away; thermal noise (ie. molecules vibrating around which happen to be at your frequency); and amplifier noise (ie. inaccuracies generated just from trying to boost the signal to a point where your frequency detector circuits can see it at all). You can also have problems from other high-frequency components on the same circuit board emitting conflicting signals.

The combination of limits from amplifier error and conflicting electrical components is called the receiver sensitivity. Noise arriving from outside your receiver (both thermal noise and noise from interfering signals) is called the noise floor. Modern circuits - once properly debugged, calibrated, and shielded - seem to be good enough that receiver sensitivity is not really your problem nowadays. The noise floor is what matters.

It turns out, with modern "low-noise amplifier" (LNA) circuits, we can amplify a weak signal essentially as much as we want. But the problem is... we amplify the noise along with it. The ratio between signal strength and noise turns out to be what really matters, and it doesn't change when you amplify. (Other than getting slightly worse due to amplifier noise.) We call that the signal to noise ratio (SNR), and if you ask an expert in radio signals, they'll tell you it's one of the most important measurements in analog communications.

A note on SNR: it's expressed as a "ratio" which means you divide the signal strength in mW by the noise level in mW. But like the signal strength and noise levels, we normally want to express the SNR in decibels to make it more manageable. Decibels are based on logarithms, and because of the way logarithms work, you subtract decibels to get the same effect as dividing the original values. That turns out to be very convenient! If your noise level is -90dBm and your signal is, say, -60dBm, then your SNR is 30dB, which means 1000x. That's awfully easy to say considering how complicated the underlying math is. (By the way, after subtracting two dBm values we just get plain dB, for the same reason that if you divide 10mW by 2mW you just get 5, not 5mW.)
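The subtract-instead-of-divide trick is easy to verify. A small Python sketch using the numbers from the example above (the function names are illustrative):

```python
def snr_db(signal_dbm, noise_dbm):
    """SNR in dB is just the difference of the two dBm values."""
    return signal_dbm - noise_dbm


def db_to_ratio(db):
    """Convert a dB value back to a plain power ratio."""
    return 10 ** (db / 10)


signal_dbm, noise_dbm = -60, -90
snr = snr_db(signal_dbm, noise_dbm)

# The long way: convert both to mW first, then divide.
signal_mw = 10 ** (signal_dbm / 10)
noise_mw = 10 ** (noise_dbm / 10)

print(snr, "dB")                 # subtraction in the log domain
print(db_to_ratio(snr), "x")     # the same thing as a ratio
print(signal_mw / noise_mw, "x") # division in the linear domain
```

Both routes give the same ratio, up to floating point rounding.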

The Shannon Limit

So, finally... how big does the SNR need to be in order to be "good"? Can you just receive any signal where SNR > 1.0x (which means signal is greater than noise)? And when SNR < 1.0x (signal is less than noise), all is lost?

Nope. It's not that simple at all. The math is actually pretty complicated, but you can read about the Shannon Limit on wikipedia if you really want to know all the details. In short, the bigger your SNR, the faster you can go. That makes a kind of intuitive sense I guess.

(But it's not really all that intuitive. When someone is yelling, can they talk faster than when they're whispering? Perhaps it's only intuitive because we've been trained to notice that wifi goes faster when the nodes are closer together.)

The Shannon limit even calculates that you can transfer some data even when the signal power is lower than the noise, which seems counterintuitive or even impossible. But it's true, and the global positioning system (GPS) apparently actually does this, and it's pretty cool.
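For a rough feel of the numbers, the usual statement of the limit (the Shannon-Hartley theorem) is capacity = bandwidth x log2(1 + SNR), with SNR as a plain ratio rather than in dB. The Python sketch below assumes a 20 MHz channel purely for illustration; real wifi rates sit well below this theoretical ceiling.

```python
import math


def shannon_capacity_bps(bandwidth_hz, snr_db):
    """Theoretical maximum bit rate for a given bandwidth and SNR (in dB)."""
    snr_ratio = 10 ** (snr_db / 10)      # dB back to a plain ratio
    return bandwidth_hz * math.log2(1 + snr_ratio)


BANDWIDTH_HZ = 20e6  # assumed channel width, for illustration only

for snr in [-10, 0, 10, 20, 30]:
    mbps = shannon_capacity_bps(BANDWIDTH_HZ, snr) / 1e6
    print(f"SNR {snr:>4} dB -> at most {mbps:.1f} Mbit/s")
```

Because of the `1 +` inside the logarithm, the result stays above zero even when the SNR in dB is negative, which is where the signal-below-noise claim comes from.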

The Maximum Range of Wifi is Unchangeable

So that was all a very long story, but it has a point. Wifi signal strength is fundamentally limited by two things: the regulatory transmitter power limit (30dBm or less, depending on the frequency and geography), and the distance between transmitter and receiver. You also can't do much about background noise; it's roughly -90dBm or maybe a bit worse. Thus, the maximum speed of a wifi link is fixed by the laws of physics. Transmitters have been transmitting at around the regulatory maximum since the beginning.

So how, then, do we explain the claims that newer 802.11n devices have "double the range" of the previous-generation 802.11g devices?

Simple: they're marketing lies. 802.11g and 802.11n have exactly the same maximum range. In fact, 802.11n just degrades into 802.11g as the SNR gets worse and worse, so this has to be true.

802.11n is certainly faster at close and medium range. That's because 802.11g tops out at an SNR of about 20dB. That is, the Shannon Limit says you can go faster when you have >20dB, but 802.11g doesn't try; technology wasn't ready for it at the time. 802.11n can take advantage of that higher SNR to get better speeds at closer ranges, which is great.

But the claim about longer range, by any normal person's definition of range, is simply not true.

Luckily, marketing people are not normal people. In the article I linked above they explain how. Basically, they define "twice the range" as a combination of "twice the speed at the same distance" and "the same speed at twice the distance." That is, a device fulfilling both criteria has double the range of an original device which fulfills neither.

It sounds logical, but in real life, that definition is not at all useful. You can do it by comparing, say, 802.11g and 802.11n at 5ft and 10ft distances. Sure enough, 802.11n is more than twice as fast as 802.11g at 5ft! And at 10ft, it's still faster than 802.11g at 5ft! Therefore, twice the range. Magic, right? But at 1000ft, the same equations don't work out. Oddly, their definition of "range" does not include what happens at maximum range.

I've been a bit surprised at how many people believe this "802.11n has twice the range" claim. It's obviously great for marketing; customers hate the limits of wifi's maximum range, so of course they want to double it, or at least increase it by any nontrivial amount, and they will pay money for a new router if it can do this. As of this writing, even wikipedia's table of maximum ranges says 802.11n has twice the maximum range of 802.11g, despite the fact that anyone doing a real-life test could easily discover that this is simply not the case. I did the test. It's not the case. You just can't cheat Shannon and the Signal to Noise Ratio.

...

Coming up next, some ways to cheat Shannon and the Signal to Noise Ratio.

2014-07-27 »

Wifi, Interference and Phasors

Before we get to the next part, which is fun, we need to talk about phasors. No, not the Star Trek kind, the boring kind. Sorry about that.

If you're anything like me, you might have never discovered a use for your trigonometric identities outside of school. Well, you're in luck! With wifi, trigonometry, plus calculus involving trigonometry, turns out to be pretty important to understanding what's going on. So let's do some trigonometry.

Wifi modulation is very complicated, but let's ignore modulation for the moment and just talk about a carrier wave, which is close enough. Here's your basic 2.4 GHz carrier:

- A cos (ω t)

Where A is the transmit amplitude and ω = 2πf, with f = 2.4e9 Hz (2.4 GHz). The wavelength, λ, is the speed of light divided by the frequency, so:

- λ = c / f = 3.0e8 / 2.4e9 = 0.125m

That is, 12.5 centimeters long. (By the way, just for comparison, the wavelength of visible light is around 400-700 nanometers, or roughly 250,000 times shorter than a wifi signal. That comes out to 600 Terahertz or so. But all the same rules apply.)
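If you want to check the arithmetic yourself, a few lines will do it. This is only a sketch: the helper name is made up, and the speed of light is rounded to 3.0e8 m/s as in the text.

```python
C = 3.0e8  # speed of light in m/s (rounded, as in the text)

def wavelength_m(frequency_hz: float) -> float:
    """Wavelength in meters for a wave travelling at the speed of light."""
    return C / frequency_hz

wifi = wavelength_m(2.4e9)     # 2.4 GHz wifi carrier
light = wavelength_m(600e12)   # 600 THz, roughly the middle of visible light

print(f"wifi:  {wifi} m")
print(f"light: {light * 1e9:.0f} nm")
print(f"ratio: {wifi / light:.0f}")
```

The ratio of the two wavelengths is the same as the ratio of the two frequencies, just upside down.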

The reason I bring up λ is that we're going to have multiple transmitters. Modern wifi devices have multiple transmit antennas so they can do various magic, which I will try to explain later. Also, inside a room, signals can reflect off the walls, which is a bit like having additional transmitters.

Let's imagine for now that there are no reflections, and just two transmit antennas, spaced some distance apart on the x axis. If you are a receiver also sitting on the x axis, then what you see is two signals:

- cos (ω t) + cos (ω t + φ)

Where φ is the phase difference (between 0 and 2π). The phase difference can be calculated from the distance between the two antennas, r, and λ, as follows:

- φ = 2π r / λ

Of course, a single-antenna receiver can't actually see two signals. That's where the trig identities come in.

Constructive Interference

Let's do some simple ones first. If r = λ, then φ = 2π, so:

- cos (ω t) + cos (ω t + 2π)

= cos (ω t) + cos (ω t)

= 2 cos (ω t)

That one's pretty intuitive. We have two antennas transmitting the same signal, so sure enough, the receiver sees a signal twice as tall. Nice.

Destructive Interference

The next one is weirder. What if we put the second transmitter 6.25cm away, which is half a wavelength? Then φ = π, so:

- cos (ω t) + cos (ω t + π)

= cos (ω t) - cos (ω t)

= 0
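Both of the cases so far are easy to check numerically. The sketch below assumes the phase difference is measured in radians, so that one full wavelength of spacing corresponds to 2π; the function names and the 1000-sample resolution are my own choices.

```python
import math

WAVELENGTH_M = 0.125  # 2.4 GHz carrier, from the text

def phase_offset(spacing_m: float) -> float:
    """Phase difference in radians for antennas spacing_m apart on the x axis."""
    return 2 * math.pi * spacing_m / WAVELENGTH_M

def peak_amplitude(phi: float, samples: int = 1000) -> float:
    """Largest |cos(x) + cos(x + phi)| over one carrier cycle, where x = omega*t."""
    return max(
        abs(math.cos(x) + math.cos(x + phi))
        for x in (2 * math.pi * k / samples for k in range(samples))
    )

print(peak_amplitude(phase_offset(0.125)))   # one wavelength apart: close to 2
print(peak_amplitude(phase_offset(0.0625)))  # half a wavelength apart: close to 0
```

The second number won't print as exactly zero, because floating point never quite lets cos(x + π) equal -cos(x), but it is as close to zero as a computer gets.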

The two transmitters are interfering with each other! A receiver sitting on the x axis (other than right between the two transmit antennas) won't see any signal at all. That's a bit upsetting, in fact, because it leads us to a really pivotal question: where did the energy go?

We'll get to that, but first things need to get even weirder.

Orthogonal Carriers

Let's try φ = π/2.

- cos (ω t) + cos (ω t + π/2)

= cos (ω t) - sin (ω t)

This one is hard to explain, but the short version is, no matter how much you try, you won't get that to come out to a single (Edit 2014/07/29: non-phase-shifted) cos or sin wave. Symbolically, you can only express it as the two separate terms, added together. At each point, the sum has a single value, of course, but there is no formula for that single value which doesn't involve both a cos ωt and a sin ωt. This happens to be a fundamental realization that leads to all modern modulation techniques.

Let's play with it a little and do some simple AM radio (amplitude modulation). That means we take the carrier wave and "modulate" it by multiplying it by a much-lower-frequency "baseband" input signal. Like so:

- f(t) cos (ω t)

Where ω is much higher than any frequency present in f(t), so that for any given cycle of the carrier wave, f(t) can be assumed to be "almost constant."

On the receiver side, we get the above signal and we want to discover the value of f(t). What we do is multiply it again by the carrier:

- f(t) cos (ω t) cos (ω t)

= f(t) cos² (ω t)

= f(t) (1 - sin² (ω t))

= ½ f(t) (2 - 2 sin² (ω t))

= ½ f(t) (1 + (1 - 2 sin² (ω t)))

= ½ f(t) (1 + cos (2 ω t))

= ½ f(t) + ½ f(t) cos (2 ω t)

See? Trig identities. Next we do what we computer engineers call a "dirty trick" and, instead of doing "real" math, we'll just hypothetically pass the resulting signal through a digital or analog filter. Remember how we said f(t) changes much more slowly than the carrier? Well, the second term in the above answer changes twice as fast as the carrier. So we run the whole thing through a Low Pass Filter (LPF) at or below the original carrier frequency, removing high frequency terms, leaving us with just this:

- (...LPF...)

→ ½ f(t)

Which we can multiply by 2, and ta da! We have the original input signal.
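Here's a rough sketch of that whole round trip in code. Everything numeric is an assumption made so it runs quickly: the "carrier" is scaled down to 1 kHz, the baseband is a 5 Hz wobble, and the low-pass filter is just a moving average over one carrier cycle, which is about the crudest LPF there is. Real receivers use better filters.

```python
import math

FS = 100_000        # sample rate in Hz (assumed)
FC = 1_000          # "carrier" in Hz, scaled far down from 2.4 GHz (assumed)
PERIOD = FS // FC   # samples per carrier cycle

def baseband(t: float) -> float:
    """Slow input signal f(t): 5 Hz, much slower than the carrier."""
    return 0.5 + 0.25 * math.cos(2 * math.pi * 5 * t)

def demodulate(received):
    """Multiply by the carrier again, low-pass filter, then scale by 2."""
    mixed = [r * math.cos(2 * math.pi * FC * n / FS) for n, r in enumerate(received)]
    out = []
    for n in range(len(mixed) - PERIOD + 1):
        # Averaging over one full carrier cycle cancels the 2*omega term.
        out.append(2 * sum(mixed[n:n + PERIOD]) / PERIOD)
    return out

times = [n / FS for n in range(FS // 10)]   # 0.1 seconds of samples
sent = [baseband(t) * math.cos(2 * math.pi * FC * t) for t in times]
recovered = demodulate(sent)

# Each output sample corresponds to the middle of its averaging window.
for n in (0, 2500, 5000, 7500):
    print(baseband((n + PERIOD / 2) / FS), recovered[n])
```

The two printed columns should agree closely but not perfectly, because f(t) is only "almost constant" over one carrier cycle.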

Now, that was a bit of a side track, but we needed to cover that so we can do the next part, which is to use the same trick to demonstrate how cos(ω t) and sin(ω t) are orthogonal vectors. That means they can each carry their own signal, and we can extract the two signals separately. Watch this:

- [ f(t) cos (ω t) + g(t) sin (ω t) ] cos (ω t)

= [f(t) cos² (ω t)] + [g(t) cos (ω t) sin (ω t)]

= [½ f(t) (1 + cos (2 ω t))] + [½ g(t) sin (2 ω t)]

= ½ f(t) + ½ f(t) cos (2 ω t) + ½ g(t) sin (2 ω t)

[...LPF...]

→ ½ f(t)

Notice that by multiplying by the cos() carrier, we extracted just f(t). g(t) disappeared. We can play a similar trick if we multiply by the sin() carrier; f(t) then disappears and we have recovered just g(t).

In vector terms, we are taking the "dot product" of the combined vector with one or the other orthogonal unit vectors, to extract one element or the other. One result of all this is you can, if you want, actually modulate two different AM signals onto exactly the same frequency, by using the two orthogonal carriers.
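The dot product idea can be sketched numerically. To keep it simple I hold both inputs constant for one carrier cycle and use an average over that cycle as the filter; the sample count, the input values and the helper name are all assumptions for illustration.

```python
import math

N = 1000  # samples across exactly one carrier cycle (assumed resolution)
ANGLES = [2 * math.pi * k / N for k in range(N)]   # values of omega * t

def dot_with_carrier(signal, carrier) -> float:
    """Average of signal * carrier over one cycle, scaled by 2 (mix + LPF + gain)."""
    return 2 * sum(s * carrier(x) for s, x in zip(signal, ANGLES)) / N

f_value, g_value = 0.75, -0.25   # two independent inputs, held constant for one cycle
combined = [f_value * math.cos(x) + g_value * math.sin(x) for x in ANGLES]

print(dot_with_carrier(combined, math.cos))  # recovers f_value (approximately)
print(dot_with_carrier(combined, math.sin))  # recovers g_value (approximately)
```

Each carrier pulls out only its own input; the other one averages away to nothing.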

QAM

But treating it as just two orthogonal carriers for unrelated signals is a little old fashioned. In modern systems we tend to think of them as just two components of a single vector, which together give us the "full" signal. That, in short, is QAM, one of the main modulation methods used in 802.11n. To oversimplify a bit, take this signal:

- f(t) cos (ω t) + g(t) sin (ω t)

And let's say f(t) and g(t) at any given point in time each have a value that's one of: 0, 1/3, 2/3, or 1. Since each function can have one of four values, there are a total of 4*4 = 16 different possible combinations, which corresponds to 4 bits of binary data. We call that encoding QAM16. If we plot f(t) on the x axis and g(t) on the y axis, that's called the signal "constellation."
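As a toy illustration of that oversimplified QAM16, here is one way to map 4 bits onto the 16 points and back. The bit assignment (high two bits pick f, low two bits pick g) is purely my assumption; real systems typically use levels centred on zero and a Gray-coded bit order.

```python
LEVELS = [0.0, 1 / 3, 2 / 3, 1.0]   # the four values from the text

def encode(nibble: int):
    """Map 4 bits to (f, g): high two bits pick f, low two bits pick g."""
    if not 0 <= nibble < 16:
        raise ValueError("expected a 4-bit value")
    return LEVELS[nibble >> 2], LEVELS[nibble & 0b11]

def decode(f: float, g: float) -> int:
    """Pick the nearest level on each axis and rebuild the 4 bits."""
    def nearest(value: float) -> int:
        return min(range(4), key=lambda i: abs(LEVELS[i] - value))
    return (nearest(f) << 2) | nearest(g)

constellation = [encode(n) for n in range(16)]
print(len(set(constellation)))   # number of distinct constellation points
print(decode(0.3, 0.9))          # a noisy sample near (1/3, 1)
```

The decoder is where the noise matters: the closer together the points are packed, the less noise it takes to land nearer the wrong one.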

Anyway we're not attempting to do QAM right now. Just forget I said anything.

Adding out-of-phase signals

Okay, after all that, let's go back to where we started. We had two transmitters both sitting on the x axis, both transmitting exactly the same signal cos(ω t). They are separated by a distance r, which translates to a phase difference φ. A receiver that's also on the x axis, not sitting between the two transmit antennas (which is a pretty safe assumption) will therefore see this:

- cos (ω t) + cos (ω t + φ)

= cos (ω t) + cos (ω t) cos φ - sin (ω t) sin φ

= (1 + cos φ) cos (ω t) - (sin φ) sin (ω t)

One way to think of it is that a phase shift corresponds to a rotation through the space defined by the cos() and sin() carrier waves. We can rewrite the above to do this sort of math in a much simpler vector notation:

- [1, 0] + [cos φ, sin φ]

= [1+cos φ, sin φ]

This is really powerful. As long as you have a bunch of waves at the same frequency, and each one is offset by a fixed amount (phase difference), you can convert them each to a vector and then just add the vectors linearly. The result, the sum of these vectors, is what the receiver will see at any given point. And the sum can always be expressed as the sum of exactly one cos(ω t) and one sin(ω t) term, each with its own magnitude.

This leads us to a very important conclusion:

- The sum of reflections of a signal is just an arbitrarily phase shifted and scaled version of the original.
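A quick numeric sanity check of that conclusion, as a sketch: the reflection amplitudes and phases below are made up, and the function name is mine. Each path becomes a vector, the vectors are added, and the single resulting wave is compared against the brute-force sum.

```python
import math

def combine(paths):
    """Add (amplitude, phase) paths as vectors; return (magnitude, phase)."""
    x = sum(a * math.cos(p) for a, p in paths)
    y = sum(a * math.sin(p) for a, p in paths)
    return math.hypot(x, y), math.atan2(y, x)

# Direct signal plus two made-up reflections: (amplitude, phase in radians).
paths = [(1.0, 0.0), (0.6, 2.1), (0.3, 4.0)]
magnitude, phase = combine(paths)

# At several values of omega*t, the single wave matches the full sum.
for wt in (0.0, 0.7, 1.9, 3.3, 5.0):
    total = sum(a * math.cos(wt + p) for a, p in paths)
    single = magnitude * math.cos(wt + phase)
    assert abs(total - single) < 1e-12

print(magnitude, phase)
```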

People worry about reflections a lot in wifi, but because of this rule, they are not, at least mathematically, nearly as bad as you'd think.

Of course, in real life, getting rid of that phase shift can be a little tricky, because you don't know for sure by how much the phase has been shifted. If you just have two transmitting antennas with a known phase difference between them, that's one thing. But when you add reflections, that makes it harder, because you don't know what phase shift the reflections have caused. Not impossible: just harder.

(You also don't know, after all that interference, what happened to the amplitude. But as we covered last time, the amplitude changes so much that our modulation method has to be insensitive to it anyway. It's no different than moving the receiver closer or further away.)

Phasor Notation

One last point. In some branches of electrical engineering, especially in analog circuit analysis, we use something called "phasor notation." Basically, phasor notation is just a way of representing these cos+sin vectors using polar coordinates instead of x/y coordinates. That makes it easy to see the magnitude and phase shift, although harder to add two signals together. We're going to use phasors a bit when discussing signal power later.

Phasors look like this in the general case:

- A cos (ω t) - B sin (ω t)

= [A, B]

- Magnitude = M = √(A² + B²)

tan (Phase) = tan φ = B / A

φ = atan2(B, A)

= M∠φ

= M cos (ω t + φ)

or the inverse:

- M∠φ

= [M cos φ, M sin φ]

= (M cos φ) cos (ω t) - (M sin φ) sin (ω t)

= [A, B]

= A cos (ω t) - B sin (ω t)
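The two conversions are short enough to sketch directly. The function names are mine, and φ is assumed to be in radians. The last helper shows why adding is the awkward part in polar form: you end up converting to x/y, adding, and converting back.

```python
import math

def to_polar(a: float, b: float):
    """[A, B] -> (M, phi), with phi in radians."""
    return math.hypot(a, b), math.atan2(b, a)

def to_rect(m: float, phi: float):
    """(M, phi) -> [A, B] = [M cos(phi), M sin(phi)]."""
    return m * math.cos(phi), m * math.sin(phi)

def add_polar(p, q):
    """Add two phasors given in polar form by going through x/y coordinates."""
    (a1, b1), (a2, b2) = to_rect(*p), to_rect(*q)
    return to_polar(a1 + a2, b1 + b2)

m, phi = to_polar(3.0, 4.0)
print(m, math.degrees(phi))
print(to_rect(m, phi))
print(add_polar((1.0, 0.0), (1.0, math.pi / 2)))
```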

Imaginary Notation

There's another way of modeling the orthogonal cos+sin vectors, which is to use complex numbers (ie. a real axis, for cos, and an imaginary axis, for sin). This is both right and wrong, as imaginary numbers often are; the math works fine, but perhaps not for any particularly good reason, unless your name is Euler. The important thing to notice is that all of the above works fine without any imaginary numbers at all. Using them is a convenience sometimes, but not strictly necessary. The value of cos+sin is a real number, not a complex number.
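To show the convenience, here is a small sketch using Python's built-in complex numbers. It assumes the usual convention that the real signal is the real part of the phasor times e^(jωt); the function name is my own. Adding two waves becomes plain complex addition, and the answer you actually measure is still a real number.

```python
import cmath
import math

def wave(phasor: complex, wt: float) -> float:
    """Real signal for a complex phasor at carrier angle wt = omega * t."""
    return (phasor * cmath.exp(1j * wt)).real

direct = cmath.rect(1.0, 0.0)            # amplitude 1 at phase 0
delayed = cmath.rect(1.0, math.pi / 2)   # amplitude 1 at phase pi/2
total = direct + delayed                 # complex addition is vector addition

magnitude, phase = cmath.polar(total)
print(magnitude, phase)

# The complex shortcut agrees with adding the two cosines directly.
for wt in (0.0, 1.0, 2.5):
    assert abs(wave(total, wt) - (math.cos(wt) + math.cos(wt + math.pi / 2))) < 1e-12
```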

Epilogue

Next time, we'll talk about signal power, and most importantly, where that power disappears to when you have destructive interference. And from there, as promised last time, we'll cheat Shannon's Law.
